BIGAI

BIGAI, Peking University

Transactions on Robotics

If you have any questions, please contact jiaoziyuan at bigai dot ai.

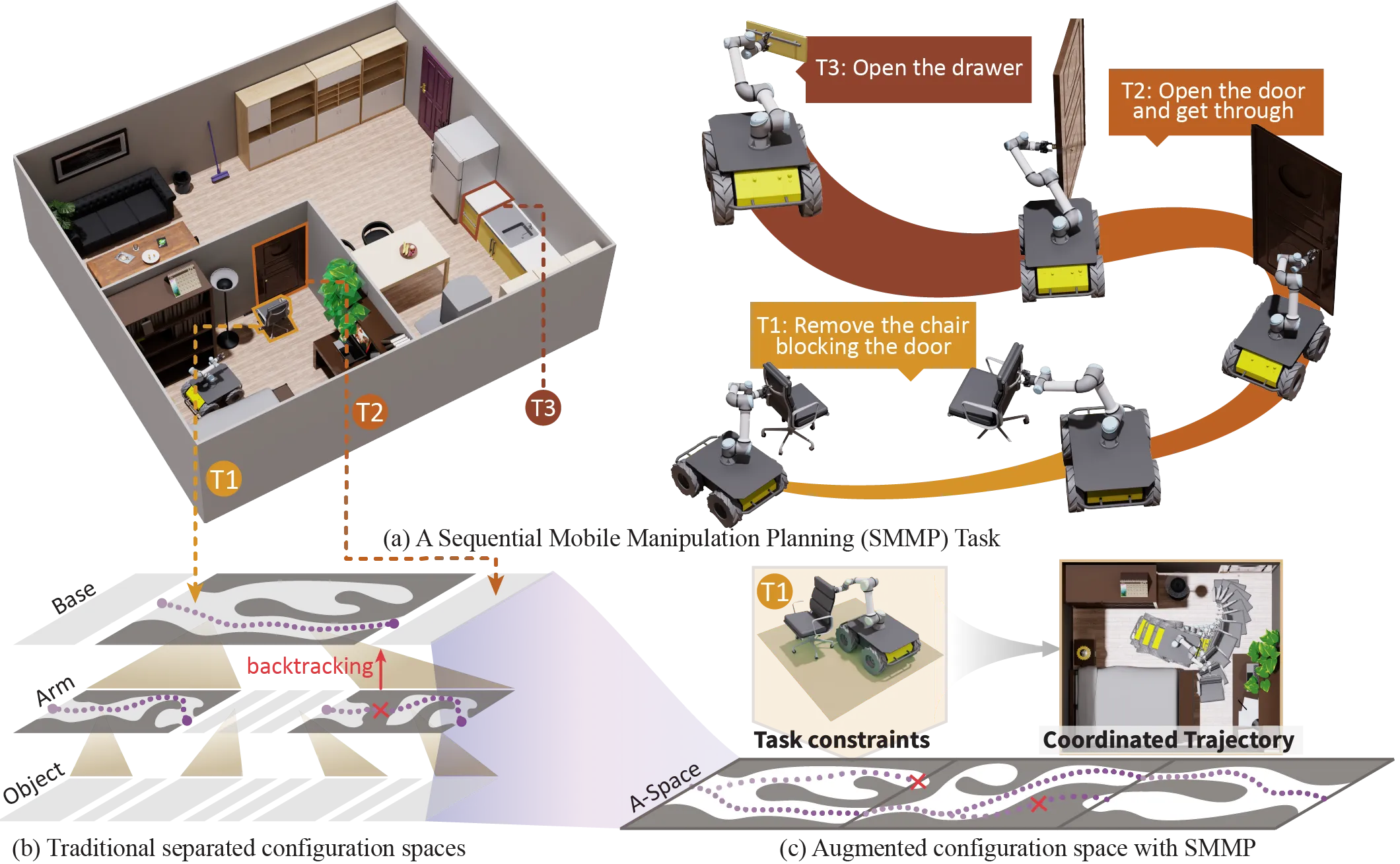

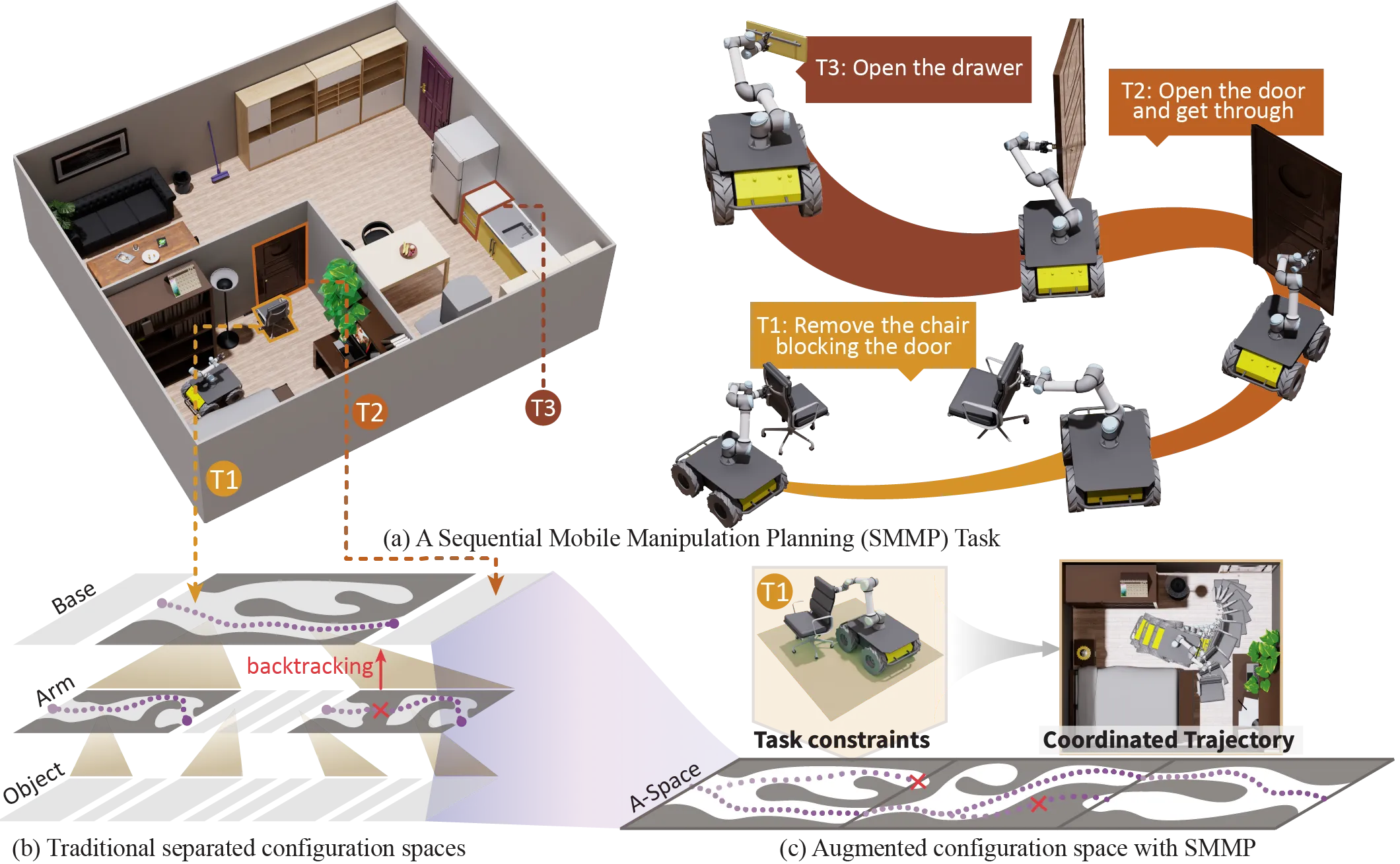

We present a Sequential Mobile Manipulation Planning (SMMP) framework that can solve long-horizon multi-step mobile manipulation tasks with coordinated whole-body motion, even when interacting with articulated objects. By abstracting environmental structures as kinematic models and integrating them with the robot’s kinematics, we construct an Augmented Configuration Space (A-Space) that unifies the previously separate task constraints for navigation and manipulation, while accounting for the joint reachability of the robot base, arm, and manipulated objects. This integration facilitates efficient planning within a tri-level framework: a task planner generates symbolic action sequences to model the evolution of A-Space, an optimization-based motion planner computes continuous trajectories within A-Space to achieve desired configurations for both the robot and scene elements, and an intermediate plan refinement stage selects action goals that ensure long-horizon feasibility. Our simulation studies first confirm that planning in A-Space achieves an 84.6% higher task success rate compared to baseline methods. Validation on real robotic systems demonstrates fluid mobile manipulation involving (i) seven types of rigid and articulated objects across 17 distinct contexts, and (ii) long-horizon tasks of up to 14 sequential steps. Our results highlight the significance of modeling scene kinematics as planning entities, rather than encoding task-specific constraints, offering a scalable and generalizable approach to complex robotic manipulation.
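As an illustration of the idea behind A-Space, the following is a minimal planar sketch, not the authors' implementation. It stacks the robot base pose, two arm joints, and the hinge angle of a door into one configuration, then measures how far the end effector is from the door handle. All names (`AugmentedConfig`, `grasp_residual`), link lengths, and the door geometry are hypothetical values chosen for this example.

```python
import math
from dataclasses import dataclass

# Hypothetical geometry for a toy planar scene (not taken from the paper).
LINK_1 = 0.5          # length of the first arm link, in meters
LINK_2 = 0.4          # length of the second arm link, in meters
HINGE = (1.0, 0.0)    # world position of the door hinge
HANDLE_RADIUS = 0.6   # distance from the hinge to the door handle


@dataclass
class AugmentedConfig:
    """One point in a toy augmented configuration space:
    robot base + arm joints + the articulated object's joint."""
    base_x: float
    base_y: float
    base_yaw: float
    arm_q1: float
    arm_q2: float
    door_angle: float


def end_effector(c: AugmentedConfig) -> tuple[float, float]:
    """Forward kinematics of the base plus a two-link planar arm."""
    a1 = c.base_yaw + c.arm_q1
    a2 = a1 + c.arm_q2
    x = c.base_x + LINK_1 * math.cos(a1) + LINK_2 * math.cos(a2)
    y = c.base_y + LINK_1 * math.sin(a1) + LINK_2 * math.sin(a2)
    return x, y


def door_handle(c: AugmentedConfig) -> tuple[float, float]:
    """Forward kinematics of the door, treated as a one-joint chain."""
    x = HINGE[0] + HANDLE_RADIUS * math.cos(c.door_angle)
    y = HINGE[1] + HANDLE_RADIUS * math.sin(c.door_angle)
    return x, y


def grasp_residual(c: AugmentedConfig) -> float:
    """Distance between end effector and handle; zero means the robot
    and the door are kinematically consistent while grasping."""
    ex, ey = end_effector(c)
    hx, hy = door_handle(c)
    return math.hypot(ex - hx, ey - hy)


# A configuration where the stretched arm reaches the handle of a door
# rotated by pi, and one where the door has moved but the robot has not.
attached = AugmentedConfig(-0.5, 0.0, 0.0, 0.0, 0.0, math.pi)
detached = AugmentedConfig(-0.5, 0.0, 0.0, 0.0, 0.0, math.pi / 2)
```

In this toy setting, a planner that searches over all six values at once can move the base, arm, and door together while driving `grasp_residual` toward zero, instead of handling navigation and manipulation constraints separately.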

To demonstrate the generality and applicability of our method across diverse object structures and realistic environments, we conducted an extensive evaluation in cluttered household scenes adopted from iThor. Each scene included articulated objects from the PartNet-Mobility dataset, which replaced existing objects of the same category to preserve contextual realism. We used AO-Grasp to generate candidate grasps for each object and tested on three new mobile manipulators. To manage the scale and complexity of this evaluation, we ported our AKR-based motion planner to the GPU-accelerated Curobo platform and developed a fully automated environment setup pipeline. A gallery of results is presented below.

The proposed AKR-based mobile manipulation planner supports data generation pipelines such as AutoMoMa and m3bench, facilitating the development of learning-based methods like m2diffuser for mobile manipulation skills.